GPT-3 is a family of large language models developed by OpenAI, an artificial intelligence research laboratory based in San Francisco. The initialism "GPT" stands for "Generative Pre-trained Transformer," and the "3" indicates that this is the third generation of these models.

The GPT models are transformer neural networks. The transformer architecture uses self-attention mechanisms to weigh different parts of the input text at each processing step, which lets the model capture more context and improves performance on natural language processing (NLP) tasks.

Autoregressive language models, which generate text one token at a time conditioned on the tokens that came before, have become a dominant trend in the artificial intelligence (AI) field. By leveraging deep learning, GPT-3 is able to perform a wide range of tasks, such as translation, question answering, and text completion.
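
The self-attention step mentioned above can be sketched in a few lines of plain Python. This is a minimal illustration of scaled dot-product attention, not OpenAI's implementation: the function names are hypothetical, and the example feeds the same toy vectors in as queries, keys, and values, whereas a real transformer first passes each token through learned projection matrices.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this position's query to every key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy "token" vectors (assumed for illustration only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` says how much one position draws on every other position, which is what "focusing on different parts of the input" means in practice.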

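What programming language is GPT-3 : OpenAI has not published GPT-3's training code, so the details of its implementation are not public. The code OpenAI released for the earlier GPT and GPT-2 models is written in Python using TensorFlow, and in January 2020 OpenAI announced that it was standardizing its deep learning work on PyTorch. Python is therefore the language most closely associated with the GPT family, including the GPT-3-derived models that ChatGPT is based on.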

Is GPT-4 a language model

Generative Pre-trained Transformer 4 (GPT-4) is a multimodal large language model created by OpenAI, and the fourth in its series of GPT foundation models.

Is ChatGPT a large language model : The term "pre-training" is used, rather than just "training," because large language models like ChatGPT are trained in phases. The phases of LLM training are: (1) pre-training, (2) instruction fine-tuning, and (3) reinforcement learning from human feedback (RLHF).

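In its announcement, OpenAI described ChatGPT as a language model, and most of its success is due to the power of the large language model behind it. However, ChatGPT also does things that a bare language model does not do by itself. A language model keeps no memory between calls, so chat history is not something the model provides on its own; the surrounding application has to supply it, typically by feeding earlier turns of the conversation back in as part of the input.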
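
One common way an application layers chat history on top of a stateless language model is to resend the whole transcript with every request. The sketch below illustrates that idea only; `build_prompt`, `chat_turn`, and `echo_length_model` are hypothetical names, the `role: text` prompt format is an assumption, and the "model" is a stand-in function rather than a real language model.

```python
def build_prompt(history, user_message):
    """Flatten earlier turns plus the new message into one text prompt."""
    lines = [f"{role}: {text}" for role, text in history]
    lines.append(f"user: {user_message}")
    lines.append("assistant:")  # cue the model to continue as the assistant
    return "\n".join(lines)

def chat_turn(model, history, user_message):
    """Run one turn; the model only ever sees the prompt built here."""
    prompt = build_prompt(history, user_message)
    reply = model(prompt)
    new_history = history + [("user", user_message), ("assistant", reply)]
    return new_history, reply

def echo_length_model(prompt):
    """Hypothetical stand-in for a language model: reports how much it saw."""
    return f"I received {len(prompt.splitlines())} lines of context."

history = []
history, first_reply = chat_turn(echo_length_model, history, "Hello")
history, second_reply = chat_turn(echo_length_model, history, "What did I say?")
```

The second call sees more context than the first only because the application resent the earlier turns; the model function itself stores nothing between calls.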

While GPT focuses on generating text, NLP encompasses a wider range of tasks such as speech recognition, language translation, sentiment analysis, and text summarization. NLP also involves the use of various techniques and algorithms, including machine learning, to process and understand human language.

Is GPT-3 a deep learning model

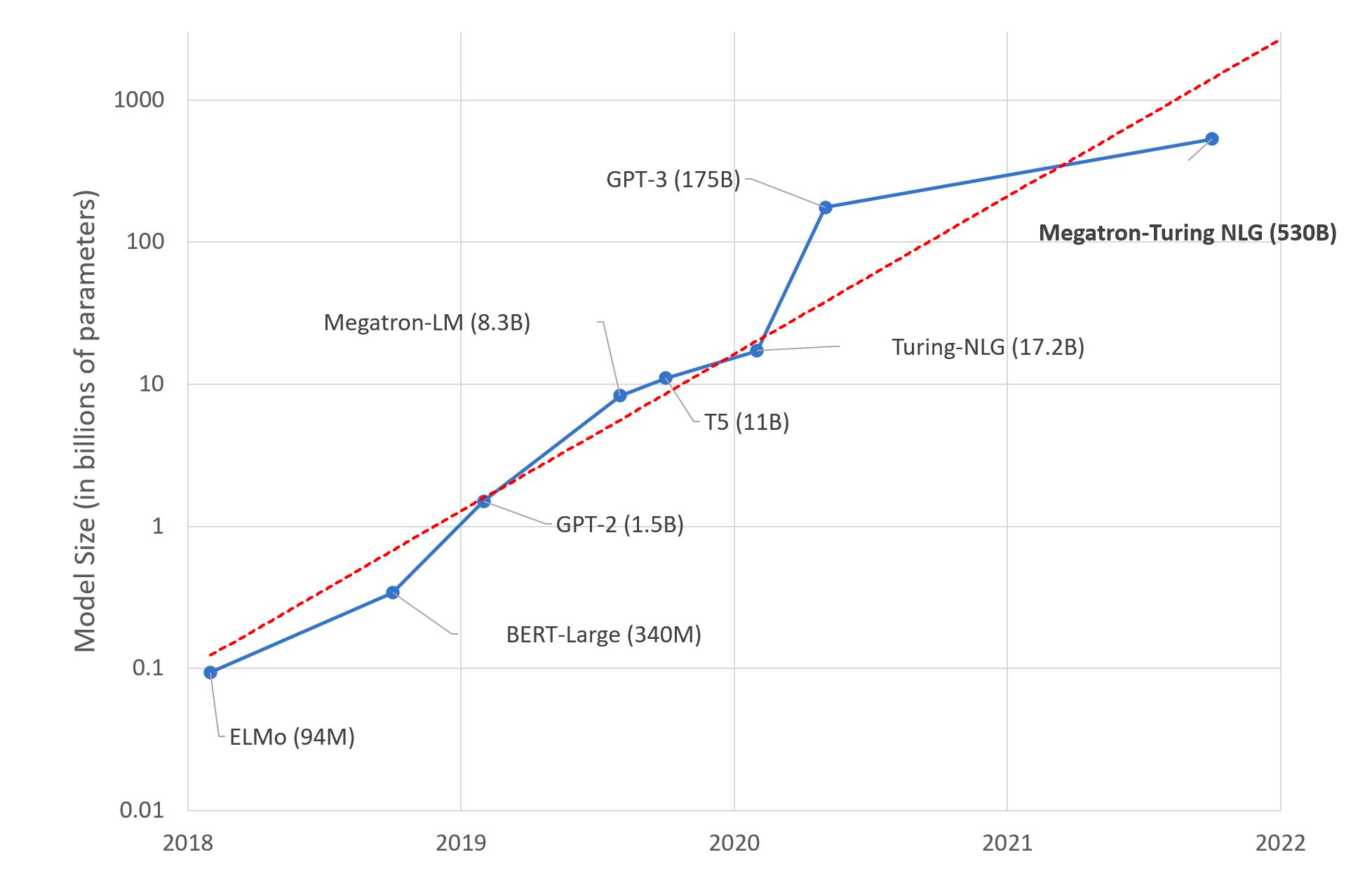

GPT models are transformer-based deep-learning neural network architectures. Previously, the best-performing neural NLP models commonly employed supervised learning from large amounts of manually labeled data, which made it prohibitively expensive and time-consuming to train extremely large language models.

This model was the latest in a line of large pretrained models designed for understanding and producing natural language by using the transformer architecture, which was published only 3 years prior and significantly improved natural language understanding task performance over that of models built on prior …

In a decoder-only transformer like GPT, the attention mechanism is “masked” so that, when generating each part of the output, it cannot look at later parts of the input. This is necessary because GPT models are trained to predict the next word in a sentence, so they should not have access to future words.
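
The masking described above can be sketched as follows. This is a minimal illustration in plain Python, assuming a square matrix of raw attention scores where `scores[i][j]` is how strongly position `i` attends to position `j`; the function name is hypothetical. Scores for future positions are set to negative infinity, so they receive zero weight after the softmax.

```python
import math

def causal_attention_weights(scores):
    """Apply a causal mask, then a row-wise softmax, to raw attention scores."""
    n = len(scores)
    weights = []
    for i in range(n):
        # Positions j > i are "future" tokens: mask them with -inf.
        masked = [scores[i][j] if j <= i else float("-inf") for j in range(n)]
        peak = max(masked)  # finite, because position i itself is never masked
        exps = [math.exp(s - peak) for s in masked]  # exp(-inf) is 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

# With identical raw scores, each position spreads its attention evenly
# over itself and the positions before it, and gives nothing to later ones.
scores = [[0.0] * 4 for _ in range(4)]
weights = causal_attention_weights(scores)
```

In this example the first position can only attend to itself, while the last position attends equally to all four.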

Is ChatGPT an NLP or LLM : ChatGPT, possibly the most famous LLM, skyrocketed in popularity because natural language is such a natural interface, one that has made the recent breakthroughs in artificial intelligence accessible to everyone.

Is ChatGPT based on large language models

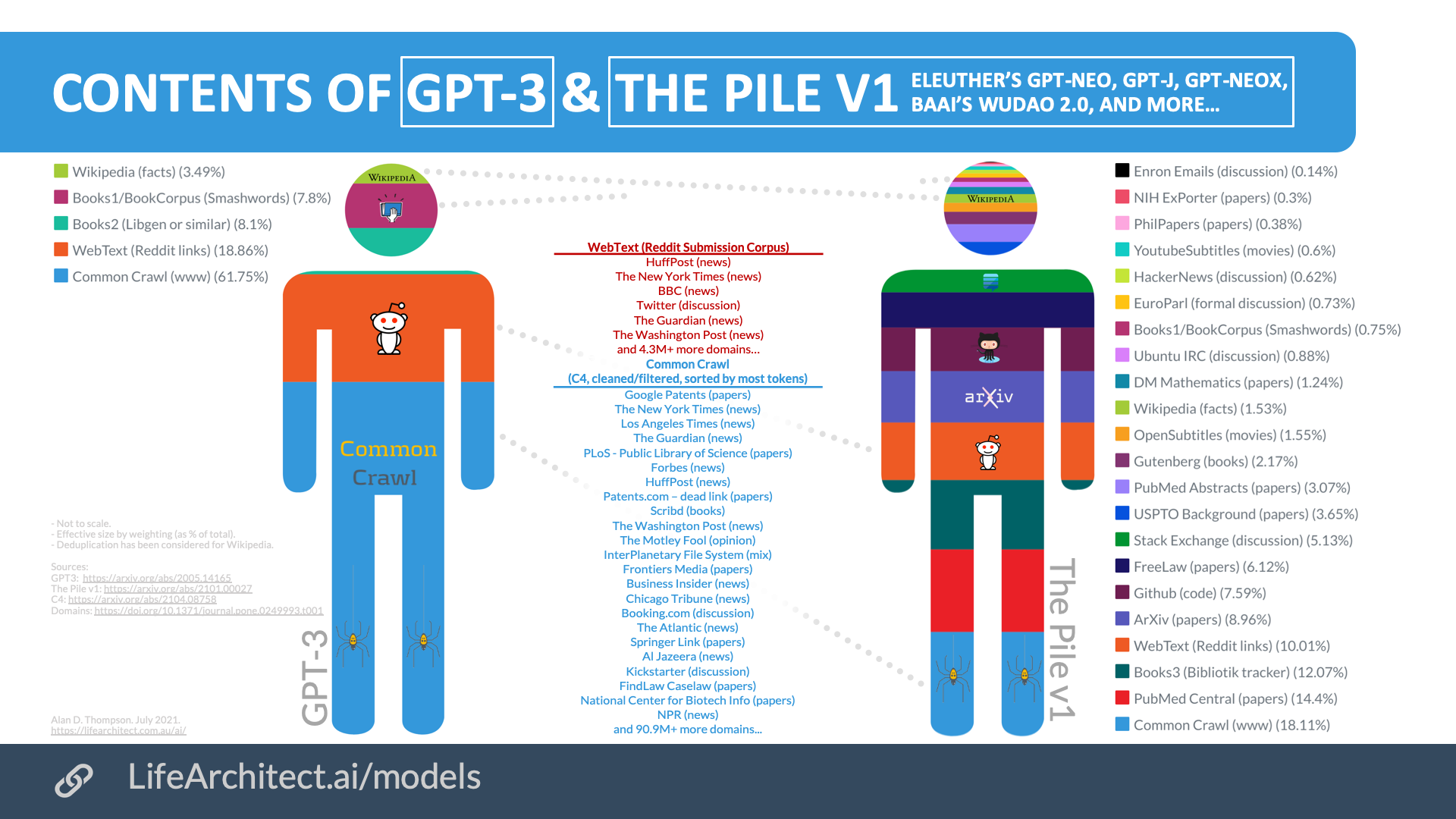

Large language models like ChatGPT learn to generate text on their own through a process called deep learning. This involves training the model on a large dataset of text, such as books, articles, and conversations, in order to learn the patterns and structure of language.
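
The idea of learning language patterns from text can be illustrated with a deliberately tiny stand-in. The sketch below is a count-based bigram model, not a neural network and not how ChatGPT is trained: it simply records which word follows which in a toy corpus and predicts the most frequent follower. The corpus and function names are assumptions made for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
prediction = predict_next(model, "the")
```

A large language model replaces these raw counts with billions of learned parameters and conditions on far more than one previous word, but the training objective, predicting what comes next, is the same in spirit.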

The size of the models behind ChatGPT is typically quantified in terms of the number of parameters. For reference, the largest GPT-3 model has 175 billion parameters. OpenAI has not officially published parameter counts for gpt-3.5-turbo, the API name for the model family behind early versions of ChatGPT, or for the versions that followed.

ChatGPT is an NLP (natural language processing) system that understands and generates natural language. To be more precise, it is a consumer-facing chat application built on models descended from GPT-3, a general-purpose text generation model, and fine-tuned to follow instructions in conversation.
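
To give a sense of scale for a parameter count like 175 billion (the published size of the largest GPT-3 model), the arithmetic below estimates how much memory is needed just to store the weights. It is a back-of-the-envelope sketch: the function name is hypothetical, it assumes 4 bytes per parameter for 32-bit floats and 2 bytes for 16-bit floats, and it ignores activations, optimizer state, and any other overhead.

```python
def parameter_memory_gb(num_parameters, bytes_per_parameter):
    """Memory needed to store the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

gpt3_parameters = 175_000_000_000  # largest GPT-3 model

fp32_gb = parameter_memory_gb(gpt3_parameters, 4)  # 32-bit floats -> 700.0 GB
fp16_gb = parameter_memory_gb(gpt3_parameters, 2)  # 16-bit floats -> 350.0 GB
```

Even at 16-bit precision the weights alone exceed the memory of a single typical accelerator, which is why models of this size are served across multiple devices.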

What is the difference between ChatGPT and NLP : NLP is a field of techniques, while ChatGPT is one product built with them. Launched in November 2022, ChatGPT differentiates itself from other NLP tools by using a large language model to generate coherent, conversational responses in real time.